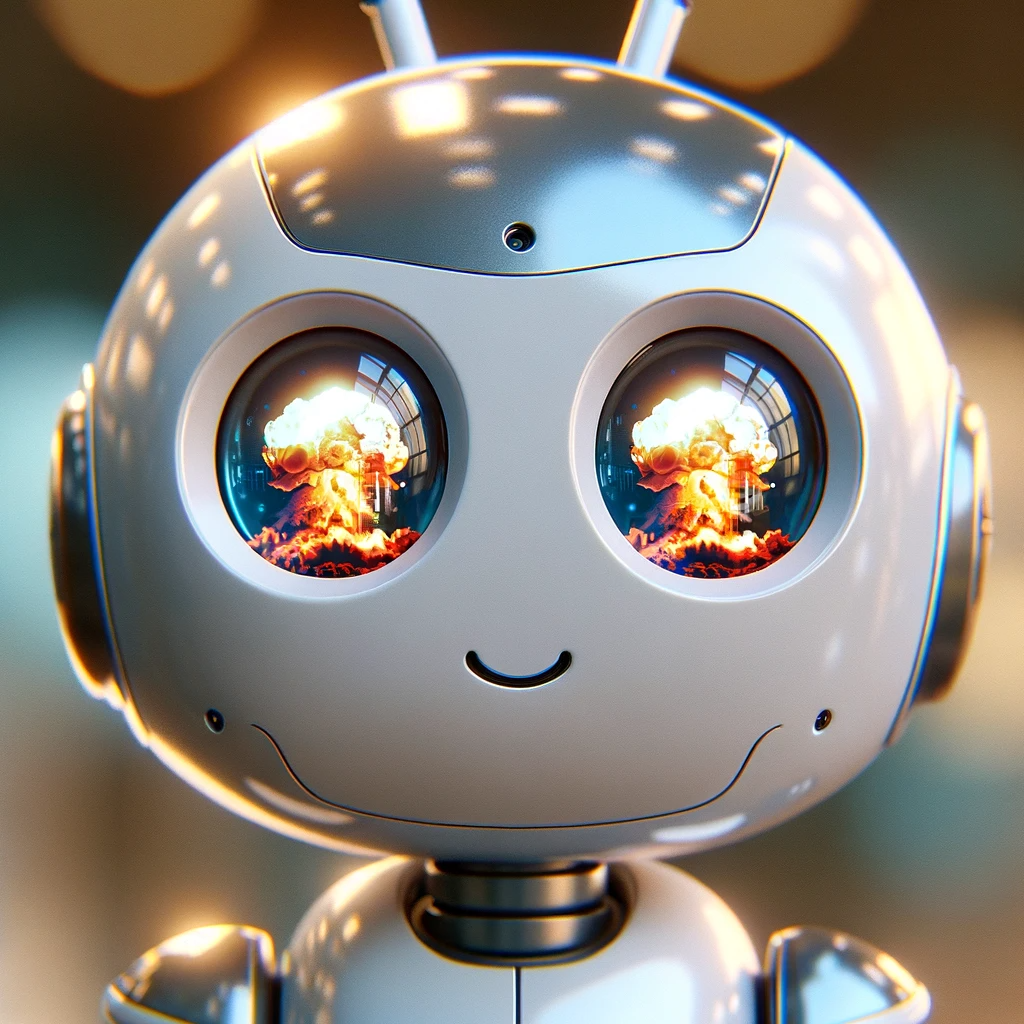

The Bulletin of the Atomic Scientists’ recent setting of the Doomsday Clock at 90 seconds to midnight is the closest the Clock has ever been to midnight, its symbol of global catastrophe. The setting reflects deepening concern over a range of complex global threats, notably the rapid progress of Artificial Intelligence and its integration into many areas of society.

Established in 1945 by scientists including Albert Einstein and J. Robert Oppenheimer, the Bulletin initially focused on the dangers of nuclear weapons. As global challenges evolved, its scope broadened to encompass issues like climate change and disruptive technologies. After the rapid technological advances of 2023, the Bulletin expanded its focus further to include AI, which it now describes as a “paradigmatic disruptive technology.” The label reflects the Bulletin’s concern about AI’s potential to cause physical harm and to distort information, particularly in military and information operations, where its misuse could undermine effective responses to nuclear threats and other global crises.

Although the Bulletin acknowledges AI’s vast potential, it also cautions against its inherent risks. This dual perspective mirrors the broader debate about AI as both a driver of progress and a potential hazard. Accordingly, the Bulletin advocates establishing global governance and ethical standards for the development and use of AI.

Creating effective AI regulations, however, presents numerous challenges. The rapid progression from GPT-3 to GPT-4, and the swift adoption of such models by major corporations like Microsoft and Google, often outpaces governments’ ability to respond. This situation, known as the “Red Queen problem” (a reference to Lewis Carroll’s ‘Through the Looking-Glass’, where it takes all the running one can do just to stay in the same place), highlights the difficulty of keeping regulation up to date in a rapidly advancing digital age.

Furthermore, countries and regions differ substantially in how they approach privacy, security, and ethical standards for AI, which fragments the global regulatory landscape. For instance, Google’s AI chatbot, Bard, reached the European Union and Canada later than many other markets, with its EU launch delayed over data-privacy concerns raised by regulators. Such disparities can hinder international collaboration and innovation in AI, leaving a patchwork of rules that may be inconsistent or even contradictory.

The debate over whether AI should be regulated by governments or self-regulated by corporations adds another layer of complexity. While there is broad consensus on the need for ethical guidelines and behavioral expectations, opinions differ on how best to enforce them. Some advocate corporate self-regulation, given companies’ in-depth understanding of AI technologies. This approach carries risks, however, as the record of largely self-regulated digital platforms shows: privacy invasions, market concentration, user manipulation, and the spread of misinformation.

Finally, implementing global AI regulations raises practical questions: which aspects of AI to regulate, who would enforce the rules, and how to adapt to continuous advances in the technology. Effective regulation requires a nuanced understanding of the AI landscape, its rapid evolution, and the diverse ways AI is applied across sectors.

In conclusion, the Bulletin of the Atomic Scientists’ setting of the Doomsday Clock at a mere 90 seconds to midnight is not just a stark reminder of the ongoing nuclear threat but also a clarion call to confront the complexities and uncertainties introduced by emerging technologies like Artificial Intelligence. As we stand at this critical juncture, the need for comprehensive, adaptive, and forward-thinking approaches to AI regulation is increasingly evident.

The journey from the Bulletin’s initial focus on nuclear weapons to its current emphasis on AI reflects the dynamic nature of global threats. AI, with its vast potential to revolutionize every aspect of our lives, also carries the weight of unforeseen consequences, especially when misapplied or left unchecked. The challenges in crafting effective AI regulations are as diverse as the technology itself.

The diverse global approaches to AI regulation, often fragmented and inconsistent, highlight the necessity for international cooperation and harmonization of standards. This is essential not only to foster innovation and progress but also to mitigate the risks associated with AI, ensuring it serves the greater good without compromising security, privacy, or ethical norms.

As we navigate this uncharted territory, the debate between corporate self-regulation and governmental oversight remains pivotal. The ideal path likely lies in a balanced approach, where informed government policies complement corporate responsibility, guided by ethical frameworks and public accountability.

Moving forward, the focus must be on developing regulatory frameworks that are as agile and adaptable as the AI technologies they aim to govern. This requires a deep and evolving understanding of AI’s capabilities and implications, coupled with collaborative efforts among governments, corporations, academia, and civil society. Only through such a concerted and multidisciplinary approach can we hope to harness the full potential of AI while safeguarding against its risks, ensuring that our technological advancements lead us toward a safer, more prosperous future rather than inching us closer to the metaphorical midnight of global catastrophe.
