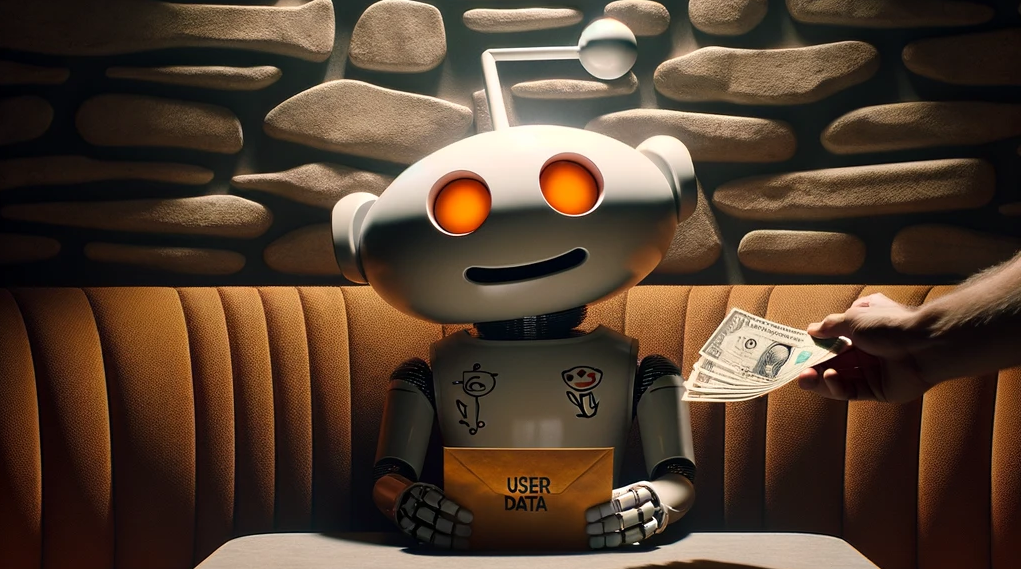

This week, Reddit announced a new licensing agreement that gives an unnamed major AI company access to the user-generated content on its platform. This move, likely a component of its broader strategy before an initial public offering, signifies a potential shift in how AI companies approach data collection. Rather than relying on the controversial method of web scraping, companies may now start licensing datasets composed of user-generated content directly from the platforms that host it. This transition mirrors a broader trend within the tech industry to formalize access to valuable data, as evidenced by similar partnerships between OpenAI and publishers such as Axel Springer and other news organizations.

The reaction to this partnership within the Reddit community and among privacy advocates has been notably mixed. On one hand, the deal could offer Reddit a stable revenue stream and potentially improve its valuation ahead of its IPO. On the other hand, concerns about data privacy and the ethical use of user-generated content have surfaced. A related development is Reddit’s revision of its API pricing policy, which sparked widespread debate and protest within its community. The new policy, which charges developers for high-volume access to its API, aims to curb the free scraping of its data for AI training, among other uses. This decision led to the shutdown of several third-party apps reliant on Reddit’s data and triggered a blackout protest by thousands of subreddits, highlighting the community’s vital role and its potential leverage against the platform’s policy changes.

Amidst this evolving landscape, the unauthorized use of content has also sparked significant legal and ethical debates, particularly regarding the materials used to train artificial intelligence models. This issue has been brought into sharp focus by litigation against major tech companies like Google, underscoring the ongoing conflict between the drive for technological advancement and the protection of copyright principles. Notably, a legal action against Google has accused the company of harnessing data from the web for its AI products, like Bard and Imagen, without sufficiently considering alternative ways of sourcing such data, raising pivotal copyright infringement questions. This scenario points to a broader concern: the potential for AI to utilize copyrighted content in ways that might infringe upon the original creators’ rights.

Moreover, the discourse extends beyond copyright issues to encompass privacy and data protection concerns. The indiscriminate harvesting of personal and sensitive data for AI purposes has alarmed many, prompting entities like the OECD to call for a paradigm shift. The emphasis is on a human-centric approach to AI regulation, advocating for the development of international standards that protect fundamental rights in an AI-dominated era. This includes tackling the ethical and legal nuances of data scraping to promote innovation while safeguarding privacy and other critical rights.

Looking forward, the Reddit deal may herald a new era of formal partnerships between content platforms and AI companies. Such arrangements could pave the way for more transparent, ethical, and legally compliant practices in AI training, balancing innovation with respect for user privacy and copyright laws. However, this shift also raises questions about the accessibility of data for AI research and development, potentially concentrating power in the hands of a few large entities capable of negotiating these deals.

As AI technologies continue to evolve, the need for clear regulatory frameworks and ethical guidelines becomes increasingly apparent. The Reddit case serves as a critical example of the ongoing dialogue between technology developers, content creators, and the broader public about the future of AI and the stewardship of the digital commons.
